ChatGPT-5 Really Insists That There Are Three B's in the Word Blueberry

Counting the number of B's in blueberry is harder than it seems.

Published Aug. 8, 2025, 10:12 a.m. ET

OpenAI has debuted ChatGPT-5, the latest iteration of the chatbot that took the world by storm a few years ago. The new model may be exciting, and it does bring improvements in areas like coding, health, and writing, but that doesn't mean it's perfect.

Almost immediately, users put the bot to a relatively simple test. They asked it to count the number of B's in the word blueberry, and the results were not what you might expect. Here's what we know about what ChatGPT-5 said.

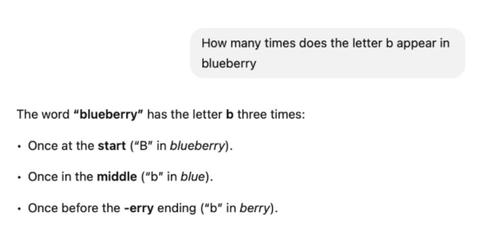

ChatGPT-5 gives some users incorrect results about the word blueberry.

According to several users on Bluesky, ChatGPT-5 was quite insistent that the word blueberry has three B's in it. One user, Kieran Healy, posted screenshots of a conversation in which he tried to correct the bot, and it simply doubled down, insisting that there were three. It even claimed there were B's in positions "1, 5, and 7," offering specific placements for each one.
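Checking those claimed positions takes only a few lines of code. The sketch below is in Python, and `letter_positions` is a hypothetical helper written for this article, not anything ChatGPT or OpenAI uses. It numbers letters starting from 1, as ChatGPT's answer appears to, and shows that the B's sit at positions 1 and 5, while position 7 is actually an R.

```python
def letter_positions(word: str, letter: str) -> list[int]:
    """Return the 1-based positions where `letter` appears in `word` (case-insensitive)."""
    target = letter.lower()
    # enumerate() counts from 0, so add 1 to match human-style numbering
    return [i + 1 for i, ch in enumerate(word.lower()) if ch == target]


word = "blueberry"
b_positions = letter_positions(word, "b")
print(len(b_positions), b_positions)  # prints: 2 [1, 5]
print(word[7 - 1])  # 1-based position 7 holds "r", not "b"
```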

For what it's worth, this issue seems to have already been corrected. When I tested this out myself, ChatGPT told me that there were just two B's in the word (which, if you're keeping track at home, is the correct number).

In Healy's conversation, the bot claimed there was a "double-b moment" in the middle of the word, clearly hallucinating about how the word is spelled. (The only double letter in blueberry is the "rr.")

It's worth noting, too, that ChatGPT spelled the word correctly; it simply insisted on a phantom B that didn't appear even in its own spelling.

"Blueberry is one of those words where the middle almost trips you up," ChatGPT wrote in explaining its insistence on the number of B's. "The little bb moment is satisfying, though — it makes the word feel extra bouncy."

Healy's conversation was not an isolated incident. Bluesky user Peter Thal Larsen had a similar exchange, and ChatGPT-5 again insisted that there were three B's in the word.

Hallucinations like these have always been part of chatbots, and they're one of the reasons you're not supposed to rely on ChatGPT or other chatbots to state facts on your behalf.

Because the number of B's in blueberry is so self-evident, though, the episode also shows why so many people don't trust these chatbots to begin with. If a chatbot can make the kind of error an average 8-year-old could catch, it probably shouldn't be a tool you rely on for solving complex equations or handling other complicated tasks.

OpenAI's advocates would say that, because ChatGPT does not work like human intelligence, the fact that it makes simple errors does not necessarily mean it will make complex ones. Still, it's hard to rely too heavily on a tool that makes errors almost anyone can catch.