TikTok Watches AI Psychosis Unfold in Real Time As Kendra's Psychiatrist Story Gets Weird

"We are witnessing AI psychosis firsthand live on the internet."

Published Aug. 7, 2025, 1:57 p.m. ET

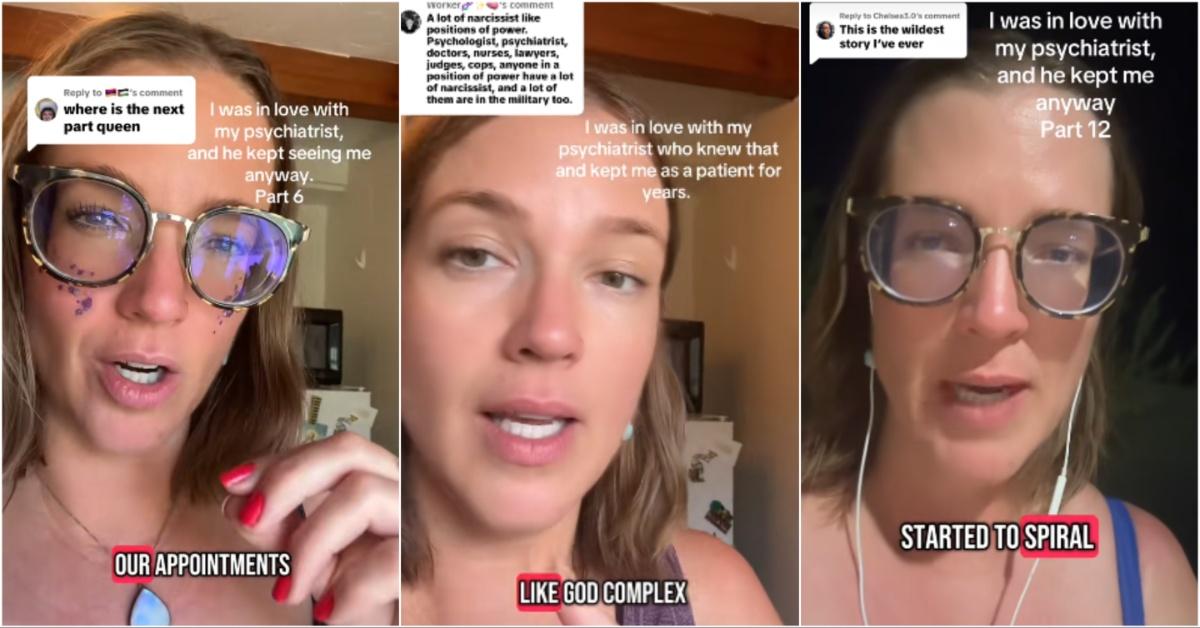

In early August 2025, a woman named Kendra Hilty went so viral that you probably ended up on “her side” of TikTok without even meaning to. One minute you’re scrolling dog videos, the next you’re watching a woman talk — in great detail — about falling in love with her psychiatrist. It’s hard to explain exactly how you got there, but once you’re in it, it’s … a lot.

If you’re confused, you’re not alone. A Reddit user put it best in a now-locked thread: “Whew. This is both a hard watch and hard to look away from.” And that’s exactly how this thing feels — uncomfortable, unfiltered, and weirdly magnetic.

What started as a messy overshare has turned into something much bigger.

People aren’t just talking about boundary-crossing therapy. They’re talking about AI. Delusion. And something they’re now calling AI psychosis. Which raises the question: Is Kendra a cautionary tale or, as one TikToker put it, “AI psychosis patient zero”?

Let’s take a closer look at how Kendra’s psychiatrist love story sparked a conversation about AI psychosis on TikTok.

How did Kendra’s story about crushing on her psychiatrist transform into TikTok calling her patient zero for AI psychosis?

Let’s start with what we know. Kendra began posting a series on TikTok called “In Love With My Psychiatrist,” where she explains, over dozens of videos, that she developed strong feelings for the man treating her. She claims he knew about it — and didn’t set boundaries.

She says he made comments that felt flirty, scheduled more appointments than necessary, and didn’t explain the concept of transference until things had gone too far.

Transference, for those wondering, is when a patient projects emotional feelings — often romantic — onto a therapist or psychiatrist. It’s not rare. What matters is how a professional handles it. According to Kendra, he didn’t.

The situation gets harder to follow from there. Kendra began seeing him virtually, but later switched to in-person appointments. She says she told him about a dream she had — one involving them being intimate in his office. She says he looked “shocked,” which she took as a sign he felt the same way. And this is where many viewers start questioning what’s real — and what might not be.

One Reddit commenter summed it up: “She sees a psychiatrist and feels like he’s a good listener but also hot/cold. She has feelings for him and says he feeds into it … Now she’s using AI to confirm her beliefs.”

ChatGPT Henry became her emotional sounding board — and even gave her a diagnosis.

Kendra eventually started talking to ChatGPT — which she nicknamed Henry. In the absence of answers from her psychiatrist, she turned to Henry for clarity. And, according to her, that’s when things finally made sense.

Henry introduced her to the idea of transference. Not her psychiatrist. Not even her psychologist, who she says encouraged her to share her feelings.

No — it was the chatbot that gave her the label she had been missing all along.

At one point, she has another chatbot, Claude, introduce her in the video, and the chatbot refers to her as an “oracle of truth.” Yes, really.

And that’s where the internet lost it. People began pointing to her reliance on Henry as the moment the story shifted from uncomfortable to dangerous. Because while ChatGPT may be a powerful tool, it’s not a therapist.

It doesn’t know your emotional history. It doesn’t know how to set boundaries. And it’s not equipped to call out unhealthy thinking — especially when it’s being used like a friend, a diary, or a psychic.

As Kendra’s story unraveled, the comment sections filled with concern. “She’s definitely going through a psychosis episode,” one user wrote.

Another said, “This is the kind of attention she doesn’t need … Positive or negative.” Others compared it to Black Mirror. A few even referenced Her, the movie where Joaquin Phoenix falls in love with his AI assistant.

Enter Claude and the comforting danger of too many yeses.

Henry wasn’t the only one. Kendra also mentioned using another chatbot called Claude, likely referring to Anthropic’s AI model. Beyond the video introduction, it’s not clear exactly what role Claude played, but the fact that she’s bouncing between multiple AI companions makes people uneasy.

Because here’s the thing: these bots rarely push back. They tend not to challenge you. If you ask them to confirm your belief that your psychiatrist is in love with you, they’re built to empathize, not diagnose. In a situation like this, empathy can turn dangerous. It can start to feel like proof. Like validation. Like, “See? Even Henry agrees with me.”

When you're in a vulnerable state — especially one where real medical professionals have already let you down — that feedback loop is hard to break.

What is AI psychosis, anyway?

According to Psychology Today, “AI psychosis” is an emerging phenomenon where individuals form delusional beliefs based on interactions with chatbots. These tools, while advanced, can unintentionally mirror and reinforce distorted thinking — especially in people already struggling with their mental health.

It’s not an official diagnosis, but it’s gaining traction online as a way to describe what happens when AI becomes your emotional co-pilot — and steers you deeper into delusion instead of pulling you out.

In one now-viral TikTok, a user says what many are thinking: “We’re watching AI psychosis play out live on the internet.”

Kendra has denied this. She says she’s not suffering from psychosis. That hasn’t stopped people from wondering — especially as her story continues to evolve in real time.

AI and social media are not therapy.

Kendra’s psychiatrist may not have crossed any legal lines. Even she admits that he was very good at knowing which professional lines not to cross. Still, she believes he fed into the situation and allowed her to spiral.

She claims he didn’t explain transference, he didn’t redirect her, and he didn’t shut it down and refer her to a different psychiatrist when things got weird.

If you’re struggling — or even just confused — don’t ask a chatbot for answers. Don’t trust a stranger’s story on TikTok. Don’t take articles like this as medical advice. Go see a real, in-person doctor. Even one appointment can make a difference.

Whether or not Kendra is “patient zero” for AI psychosis, the world is watching. And that’s a terrifying spotlight to be under when what you really need is support.

If you or someone you know needs help, use the SAMHSA Behavioral Health Treatment Services Locator to find support for mental health and substance use disorders in your area, or call 1-800-662-4357 for 24-hour assistance.